Szpindel cleared his throat. "Try this one."

The feed showed what she saw: a small black triangle on a white background. In the next instant it shattered into a dozen identical copies, and a dozen dozen. The proliferating brood rotated around the center screen, geometric primitives ballroom-dancing in precise formation, each sprouting smaller triangles from its tips, fractalizing, rotating, evolving into an infinite, intricate tilework...

A sketchpad, I realized. An interactive eyewitness reconstruction, without the verbiage. Susan's own pattern-matching wetware reacted to what she saw— no, there were more of them; no, the orientation's wrong; yes, that's it, but bigger— and Szpindel's machine picked those reactions right out of her head and amended the display in realtime. It was a big step up from that half-assed workaround called language. The easily-impressed might have even called it mind-reading.

Watts, P. (2006). Blindsight. CC BY-NC-SA 2.5

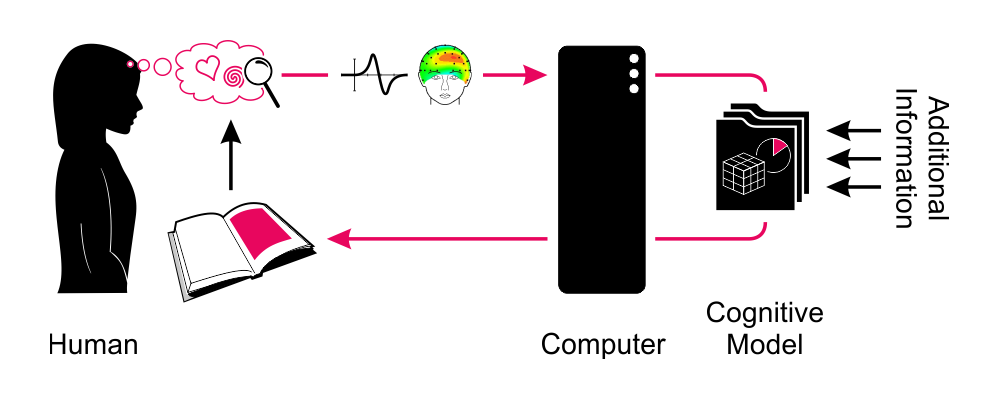

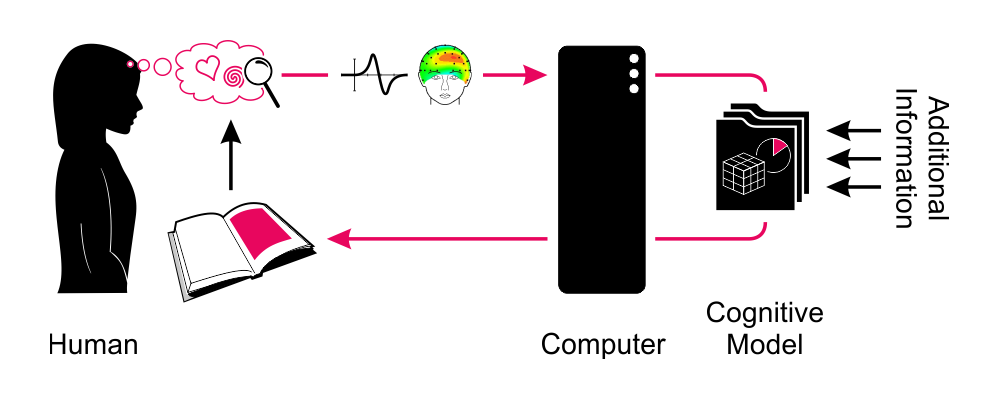

Continuously and automatically processing information is what the human brain does. When presented with something, it interprets it and reacts to it. Through passive brain-computer interfacing, it is possible to (partially) detect such automatic brain responses. It is thus possible for one and the same computer to deliberately present something to you, effectively forcing your brain to respond to it, and subsequently gauge this automatic response, in order to infer e.g. your preferences with respect to what was presented.

Imagine an electronic, neuroadaptive book, as first described here. While reading, the reader would interpret the story as it unfolded, automatically responding to events with detectable cognitive and affective states. The book could thus present the reader with specific events, e.g. specific acts performed by the characters, in order to gauge the reader's preferences. Based on the reader's apparent mindset, the book could then change the content of subsequent pages. Does the reader become sad when a character experiences a significant setback? This may mean that the reader likes this character, so in the next chapter, that character's luck may turn.

With a sequence of such adaptations, the story is gradually steered in a direction specifically tailored to the reader. However, the reader would not actively be directing the story, and would not even need to be aware of the system's existence.
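
The adaptation loop of such a book can be sketched in a few lines. This is only an illustration under stated assumptions, not an existing system: the function names, the character names, the decoded `valence` score in [-1, 1], and the update rule are all hypothetical.

```python
from collections import defaultdict

def update_preferences(preferences, character, outcome, valence, rate=0.3):
    """Update the estimated liking of a character from one probe.

    outcome: +1 if something good happened to the character, -1 if bad.
    valence: decoded affective response in [-1, 1] (hypothetical BCI output).
    A sad response (negative valence) to a setback (-1) suggests that the
    reader likes the character, so the evidence is the product of the two.
    """
    evidence = outcome * valence
    old = preferences[character]
    # Move the running estimate a fraction `rate` towards the new evidence.
    preferences[character] = old + rate * (evidence - old)
    return preferences

def choose_next_event(preferences):
    """Give good fortune (+1) to the character the reader seems to like most."""
    favourite = max(preferences, key=preferences.get)
    return favourite, +1

prefs = defaultdict(float)
update_preferences(prefs, "Alice", -1, -0.8)  # reader is sad at Alice's setback
update_preferences(prefs, "Bob", -1, 0.5)     # reader is pleased at Bob's setback
print(choose_next_event(prefs))
```

Each story event doubles as a probe: the reader never states a preference, yet the estimate shifts with every decoded response.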

Cognitive and affective probing can enhance human-computer interaction by granting the computer access to human intelligence. However, there are also a number of ethical concerns including consent and privacy: using cognitive probes, a computer may be able to force your brain to produce information that you did not want to reveal.

Further reading:

Krol, L. R., Haselager, P., & Zander, T. O. (2020). Cognitive and affective probing: a tutorial and review of active learning for neuroadaptive technology. Journal of Neural Engineering, 17(1), 012001. DOI PDF

Krol, L. R., & Zander, T. O. (2018). Towards a conceptual framework for cognitive probing. In J. Ham, A. Spagnolli, B. Blankertz, L. Gamberini, & G. Jacucci (Eds.), Symbiotic interaction (pp. 74–78). Cham: Springer International Publishing. DOI PDF

Krol, L. R., Zander, T. O., Birbaumer, N. P., & Gramann, K. (2016). Neuroadaptive technology enables implicit cursor control based on medial prefrontal cortex activity. Proceedings of the National Academy of Sciences, 113(52), 14898–14903. DOI PDF

Most aspects of our mental states are not voluntarily induced: we do not will ourselves to be hungry, to be interested, or to be fascinated—it just happens. When a computer, using passive brain-computer interfacing, detects that we are hungry, interested, or fascinated, it thus has information about us that we did not explicitly communicate. It can, however, react to this information as if it were a command: it can order us lunch, or it can provide further details about whatever it is that apparently interests or fascinates us. Although we never told the computer to do these things, our brain activity somehow resulted in these things happening. This is an example of implicit control.

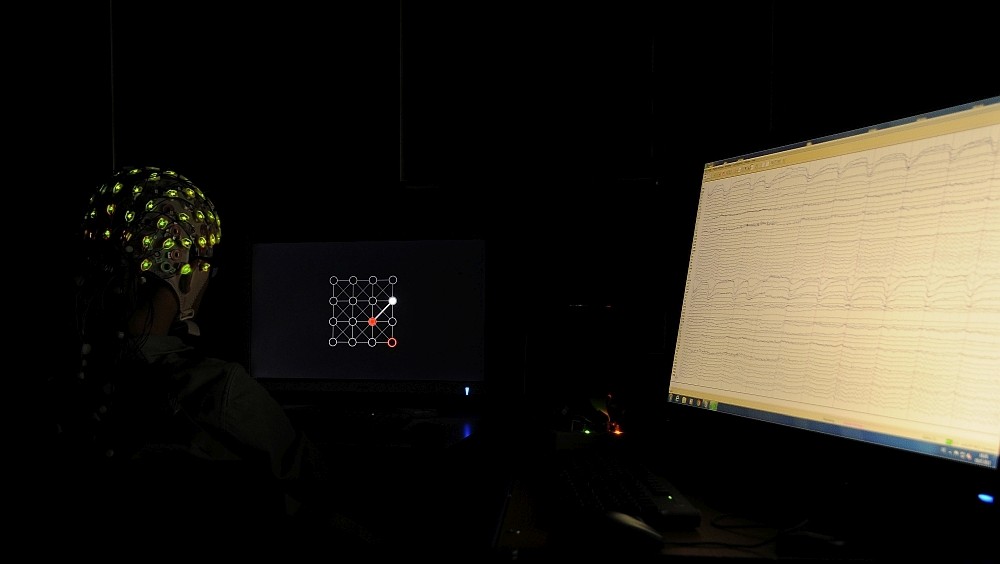

We demonstrated implicit control over a computer cursor. This task is usually highly explicit: we directly, consciously manipulate the mouse to make the cursor go where we want it to be. In our case, the cursor initially moved randomly over the nodes of a grid. Each cursor movement served as a cognitive probe. When the cursor moved in a direction that brought it closer to the user's target, that user's brain responded positively. It responded negatively if the cursor moved away from their selected target. We could thus use reinforcement learning to gradually steer the cursor in the desired direction.

Importantly, the participants in this experiment were not aware of having any influence over the cursor. They believed they were simply looking at it. However, simply perceiving something can be enough for your brain to cognitively process and interpret it. Our computer could detect these automatic interpretations in real time from the participants' brain activity, could co-register them against the cursor movements that elicited them, and could thus steer the cursor towards the target.
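
The probe-and-reinforce loop can be illustrated with a small simulation. This is a sketch under assumptions, not the method used in the study: the brain response is replaced by a simulated decoder with a fixed `accuracy`, the learning rule is a simple multiplicative weight update per direction, and all names and parameter values are hypothetical.

```python
import math
import random

# The eight possible movement directions on the grid.
DIRECTIONS = [(dx, dy) for dx in (-1, 0, 1) for dy in (-1, 0, 1) if (dx, dy) != (0, 0)]

def decoded_response(old, new, target, accuracy, rng):
    """Stand-in for the passive BCI: True means a 'positive' brain response.

    The simulated user responds positively when the move brought the cursor
    closer to the target; the decoder reports this correctly with
    probability `accuracy`.
    """
    closer = math.dist(new, target) < math.dist(old, target)
    return closer if rng.random() < accuracy else not closer

def run_trial(start, target, size=4, accuracy=0.7, rate=0.2, max_steps=200, seed=0):
    rng = random.Random(seed)
    weights = {d: 1.0 for d in DIRECTIONS}  # uniform weights: random movement at first
    pos, steps = start, 0
    while pos != target and steps < max_steps:
        # Only moves that stay on the grid are candidates.
        options = [d for d in DIRECTIONS
                   if 0 <= pos[0] + d[0] < size and 0 <= pos[1] + d[1] < size]
        move = rng.choices(options, weights=[weights[d] for d in options])[0]
        new = (pos[0] + move[0], pos[1] + move[1])
        # Each movement is a probe; the decoded response reinforces or
        # suppresses the direction that was just shown.
        positive = decoded_response(pos, new, target, accuracy, rng)
        weights[move] *= (1 + rate) if positive else (1 - rate)
        pos, steps = new, steps + 1
    return steps, weights

steps, weights = run_trial(start=(0, 0), target=(3, 3))
print(steps, max(weights, key=weights.get))
```

The simulated user never issues a command. The only input is the decoded reaction to each movement, which gradually biases the cursor towards the target.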

With access to our brain activity, a computer can obtain information about us without us explicitly communicating anything. Based on this, it can then execute certain actions to support us. Instead of explicitly instructing the computer, we can implicitly control it through our state of mind.

Further reading:

Krol, L. R., Andreessen, L. M., & Zander, T. O. (2018). Passive Brain-Computer Interfaces: A Perspective on Increased Interactivity. In C. S. Nam, A. Nijholt, & F. Lotte (Eds.), Brain-Computer Interfaces Handbook: Technological and Theoretical Advances (pp. 69–86). Boca Raton, FL, USA: CRC Press. Link PDF

Krol, L. R., Zander, T. O., Birbaumer, N. P., & Gramann, K. (2016). Neuroadaptive technology enables implicit cursor control based on medial prefrontal cortex activity. Proceedings of the National Academy of Sciences, 113(52), 14898–14903. DOI PDF

Zander, T. O., Brönstrup, J., Lorenz, R., & Krol, L. R. (2014). Towards BCI-based Implicit Control in Human-Computer Interaction. In S. H. Fairclough & K. Gilleade (Eds.), Advances in Physiological Computing (pp. 67–90). Berlin, Germany: Springer. DOI PDF

TU Berlin Press (2017). Without words: What if computers could intuitively understand us? Accessed 2018-08-01. Link

Schmidt, A. (2000). Implicit human computer interaction through context. Personal and Ubiquitous Computing, 4(2-3), 191–199. DOI

A brain-computer interface (BCI) provides you with an additional output channel, through which you can communicate with the world. Instead of using your muscles (by e.g. moving your hands or producing words), you use merely your brain activity. For example, instead of actually moving your right hand to press a button, you imagine moving your right hand, the computer detects this imagination, and interprets it as a button press.

A passive brain-computer interface specifically focuses on natural brain activity, that is, brain activity that was not actually intended to communicate with the computer. Imagine you're growing tired at the end of an evening. Your computer detects this from your brain activity, dims the lights, and plays you a lullaby. You never explicitly instructed your computer to do this. You did not pretend to be tired just to get your computer to dim the lights. No, you were simply tired, and the computer responded to this state of mind. This is an example of a passive brain-computer interface application.

Passive BCI can provide computers with an additional sensor, providing information about the user's mental state. This can be used to better support that user. Effectively, computers may be able to develop a type of empathy, a better understanding of their users that goes beyond all explicitly communicated information.

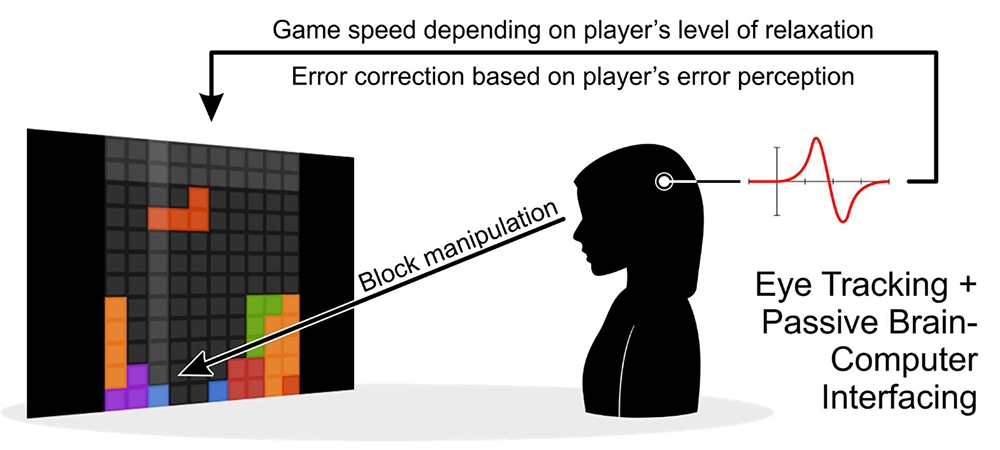

It can also enable very different interaction paradigms. Consider a game that requires you to balance different states of mind.

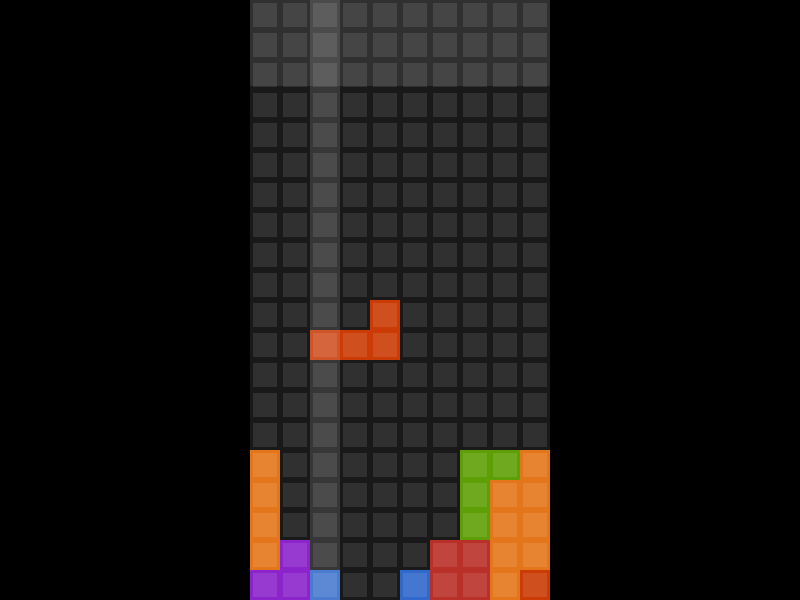

Meyendtris introduces a completely hands-free version of Tetris, using eye tracking and passive BCI to replace existing game elements and to introduce new, neuroadaptive controls. Eye tracking is used for the movement of the tetromino. Passive BCIs assess two mental states of the player to influence the game in real time. Allowing the player's brain activity to influence the game adds novel game mechanics and challenges. First, the player's state of relaxation influences game speed: the more relaxed the player, the slower the game. Don't let your mistakes upset you—the game will become more difficult! Second, if you do make mistakes, a state of error perception may be recognised by the BCI, allowing erroneously placed blocks to be automatically removed. This works best when you are properly focused on the game—don't be too relaxed!
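
The two neuroadaptive mechanics can be sketched as simple mappings from decoded mental states to game parameters. This is not the Meyendtris source code: the function names, the [0, 1] scaling of the decoder outputs, the interval bounds, and the threshold are assumptions made for illustration.

```python
def drop_interval(relaxation, fastest=0.2, slowest=1.0):
    """Seconds between tetromino drops for a relaxation index in [0, 1].

    The more relaxed the player (index near 1), the longer the interval,
    i.e. the slower the game.
    """
    r = min(max(relaxation, 0.0), 1.0)  # clamp out-of-range decoder output
    return fastest + r * (slowest - fastest)

def should_remove_last_block(error_probability, threshold=0.8):
    """Remove the last placed block if error perception was detected
    with sufficient confidence (hypothetical classifier probability)."""
    return error_probability >= threshold

for relaxation in (0.0, 0.5, 1.0):
    print(relaxation, round(drop_interval(relaxation), 2))
```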

Further reading:

Krol, L. R., Andreessen, L. M., & Zander, T. O. (2018). Passive Brain-Computer Interfaces: A Perspective on Increased Interactivity. In C. S. Nam, A. Nijholt, & F. Lotte (Eds.), Brain-Computer Interfaces Handbook: Technological and Theoretical Advances (pp. 69–86). Boca Raton, FL, USA: CRC Press. Link PDF

Krol, L. R., Freytag, S.-C., & Zander, T. O. (2017). Meyendtris: A hands-free, multimodal Tetris clone using eye tracking and passive BCI for intuitive neuroadaptive gaming. In Proceedings of the 19th ACM International Conference on Multimodal Interaction (pp. 433–437). New York, NY, USA: ACM. DOI PDF

Zander, T. O., & Krol, L. R. (2017). Team PhyPA: Brain-computer interfacing for everyday human-computer interaction. Periodica Polytechnica Electrical Engineering and Computer Science, 61(2), 209–216. DOI PDF

Zander, T. O., & Kothe, C. A. (2011). Towards passive brain-computer interfaces: applying brain-computer interface technology to human-machine systems in general. Journal of Neural Engineering, 8(2), 025005. DOI

Meyendtris: BCI+ Solutions by Brain Products

A passive brain-computer interface (BCI) focuses on natural brain activity, by which we mean brain activity that was not meant as specific input to a system. In other words, natural brain activity reflects mental states that do not represent an intention to control the BCI. Relaxation and workload are such mental states.

Workload is highly relevant in the workplace. Both overload and underload can lead to critical errors. It is thus important to be able to detect workload in real time and, if necessary, immediately adapt the workplace to match it. For example, when high workload is measured, automation levels can be increased. We have performed a number of experiments relating to the detection of workload. In particular, we have worked towards a universal workload classifier: one that can detect workload in any person and in any context, without having been specifically calibrated for that person.
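
A workload-adaptive workplace could, for instance, adjust its automation level with two thresholds. The sketch below is an assumption-laden illustration: the workload index in [0, 1], the threshold values, the number of automation levels, and the choice to lower automation during underload are all hypothetical and not taken from our experiments.

```python
def adapt_automation(level, workload, high=0.7, low=0.3, max_level=3):
    """Return the new automation level given a workload index in [0, 1].

    Two thresholds leave a neutral band in between, so that small
    fluctuations in the workload estimate do not toggle the level.
    """
    if workload > high and level < max_level:
        return level + 1  # overload: let the system take over more tasks
    if workload < low and level > 0:
        return level - 1  # underload: hand tasks back to the operator
    return level

level = 1
for workload in (0.5, 0.8, 0.9, 0.4, 0.1):
    level = adapt_automation(level, workload)
    print(workload, level)
```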

Relaxation, on the other hand, can be difficult to come by these days. One aim of the Museum of Stillness is to provide a secular place of quietude in the middle of the city, where people can find an atmosphere conducive to contemplation. To that end, a special room of stillness has been designed. During the Long Night of Museums, we worked together with the museum to demonstrate a real-time measure of "relaxation", measured from the brain activity of people sitting by themselves in the room of stillness. Participants themselves received auditory feedback: the more relaxed they were, the less wind they heard coming from speakers in the room. The audience was presented with a live feed from the room and both a scientific and an artistic visualisation of the current measurements.
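
The auditory feedback can be thought of as a smoothed mapping from the relaxation measure to the volume of the wind sound. The following is a minimal sketch, not the software used at the museum: the class name, the [0, 1] relaxation index, and the exponential smoothing are assumptions.

```python
class WindFeedback:
    """Map a relaxation index in [0, 1] to a wind volume in [0, 1]."""

    def __init__(self, alpha=0.1):
        self.alpha = alpha    # smoothing factor: higher reacts faster
        self.smoothed = None  # running estimate of relaxation

    def update(self, relaxation):
        r = min(max(relaxation, 0.0), 1.0)
        if self.smoothed is None:
            self.smoothed = r
        else:
            # Exponential moving average to avoid abrupt volume jumps.
            self.smoothed = self.alpha * r + (1 - self.alpha) * self.smoothed
        # The more relaxed the participant, the less wind they hear.
        return 1.0 - self.smoothed

feedback = WindFeedback(alpha=0.5)
for sample in (0.2, 0.6, 0.9):
    print(round(feedback.update(sample), 3))
```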

The participants noted that although the real-time feedback distracted them at times, it could also help them focus their thoughts. The audience was positive. In particular, they recalled an episode where one participant appeared to be in a state of complete relaxation at all times: the visualisation indicated no deviation in the mental state towards engagement. Usually, participants were only able to hold a state of relaxation for a certain period of time, with short relapses into seeming distraction in between. After verifying that the equipment was in order, we questioned the participant, who reported being highly experienced in zazen seated meditation, which she performed during the experiment. The measurements thus accurately reflected her ability to sustain a consistent state of mind, as reflected in her electroencephalogram.

Further reading:

Krol, L. R., Andreessen, L. M., Podgorska, A., Makarov, N., & Zander, T. O. (2018). Passive Brain-Computer Interfacing in the Museum of Stillness, Proceedings of the Artistic BCI Workshop at the SIGCHI Conference on Human Factors in Computing Systems (CHI), Montréal, Canada. PDF

Krol, L. R., Andreessen, L. M., & Zander, T. O. (2018). Passive Brain-Computer Interfaces: A Perspective on Increased Interactivity. In C. S. Nam, A. Nijholt, & F. Lotte (Eds.), Brain-Computer Interfaces Handbook: Technological and Theoretical Advances (pp. 69–86). Boca Raton, FL, USA: CRC Press. Link PDF

Zander, T. O., Shetty, K., Lorenz, R., Leff, D. R., Krol, L. R., Darzi, A. W., ... Yang, G.-Z. (2017). Automated task load detection with electroencephalography: Towards passive brain-computer interfacing in robotic surgery. Journal of Medical Robotics Research, 2(1), 1750003. DOI PDF

Krol, L. R., Freytag, S.-C., Fleck, M., Gramann, K., & Zander, T. O. (2016). A task-independent workload classifier for neuroadaptive technology: Preliminary data. In 2016 IEEE International Conference on Systems Man and Cybernetics (SMC), (pp. 003171–003174). DOI PDF